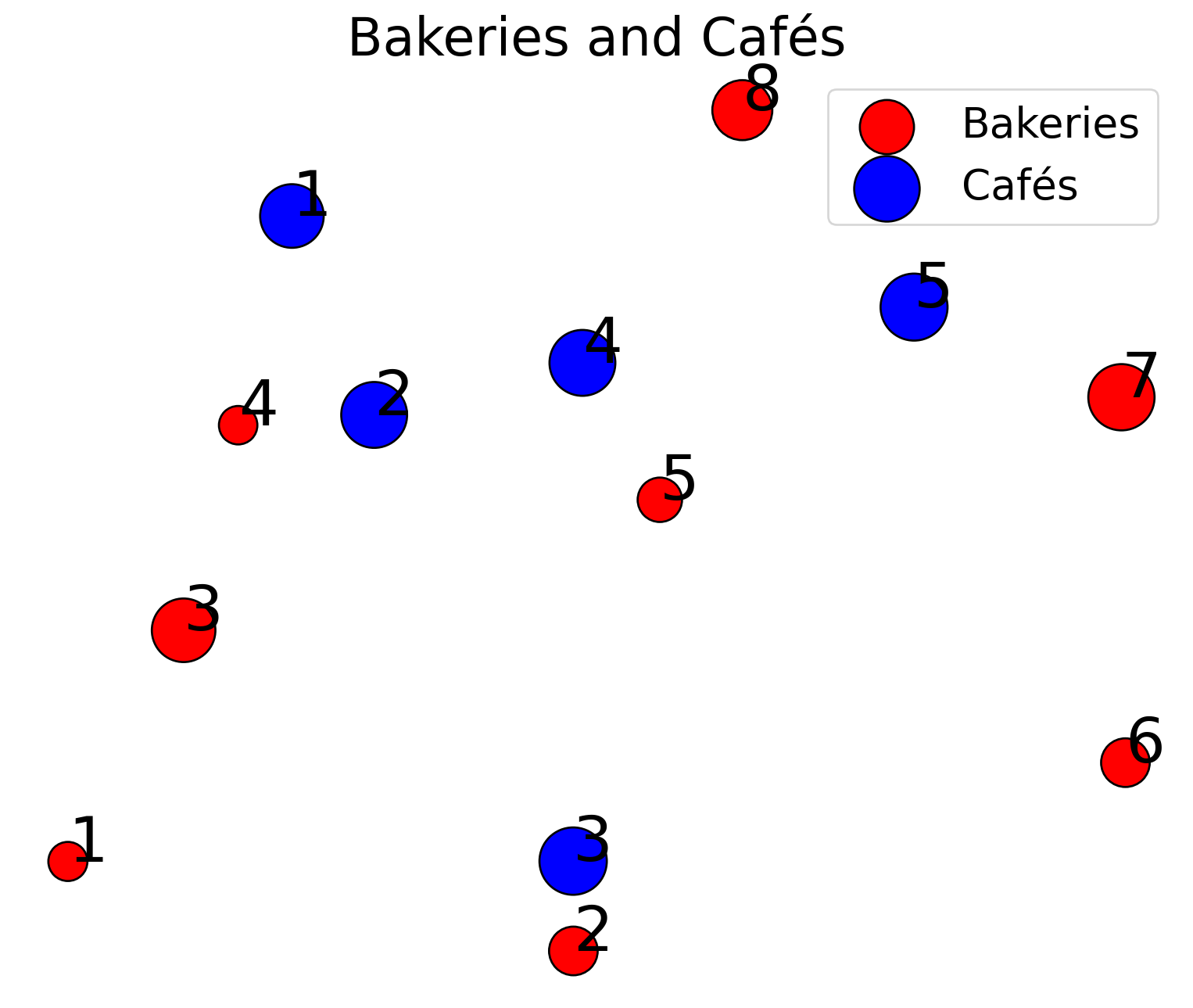

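```python
import numpy as np


def plot_weighted_points(
    ax,
    x, a,
    y, b,
    title=None, x_label=None, y_label=None
):
    """Scatter two weighted point clouds on `ax`.

    x, y: arrays of shape (n, 2) and (m, 2); a, b: weights of length n and m.
    Marker areas are proportional to the weights (s = 5000 * weight).
    """
    ax.scatter(x[:, 0], x[:, 1], s=5000*a, c='r', edgecolors='k', label=x_label)
    ax.scatter(y[:, 0], y[:, 1], s=5000*b, c='b', edgecolors='k', label=y_label)
    # Number the points of each cloud, starting from 1
    for i in range(np.shape(x)[0]):
        ax.annotate(str(i+1), (x[i, 0], x[i, 1]), fontsize=30, color='black')
    for i in range(np.shape(y)[0]):
        ax.annotate(str(i+1), (y[i, 0], y[i, 1]), fontsize=30, color='black')
    if x_label is not None or y_label is not None:
        ax.legend(fontsize=20)
    ax.axis('off')
    ax.set_title(title, fontsize=25)
```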
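`plot_weighted_points` expects each cloud as an array of shape `(n, 2)` together with a weight vector of length `n`. Because the marker area is `5000 * weight`, weights that form a probability vector keep both clouds on the same visual scale. The sketch below builds inputs of that form; `random_weighted_cloud` is a hypothetical helper, and sampling uniformly in the unit square is an arbitrary choice.

```python
import numpy as np


def random_weighted_cloud(n, rng, low=0.0, high=1.0):
    """Hypothetical helper: n points uniform in [low, high]^2 with random
    nonnegative weights normalized to sum to 1 (a discrete probability measure)."""
    points = rng.uniform(low, high, size=(n, 2))
    weights = rng.random(n)
    weights /= weights.sum()  # normalize so the weights sum to 1
    return points, weights


rng = np.random.default_rng(0)
x, a = random_weighted_cloud(5, rng)  # source cloud: 5 points
y, b = random_weighted_cloud(3, rng)  # target cloud: 3 points
```

With a matplotlib axis from `fig, ax = plt.subplots()`, the two clouds could then be drawn with `plot_weighted_points(ax, x, a, y, b, x_label='x', y_label='y')`.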

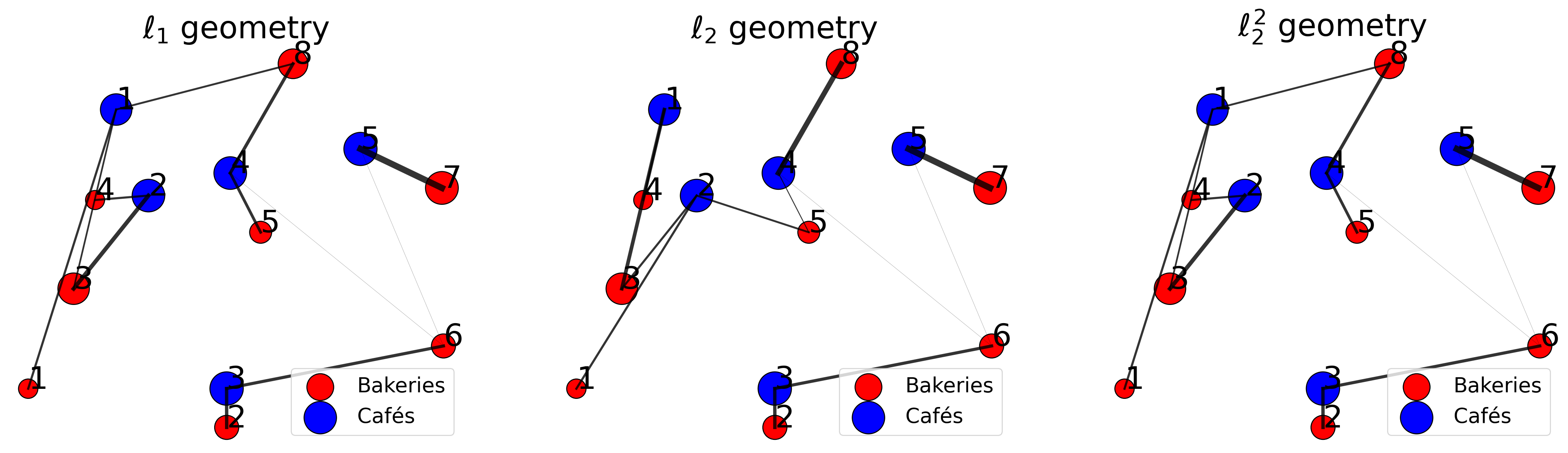

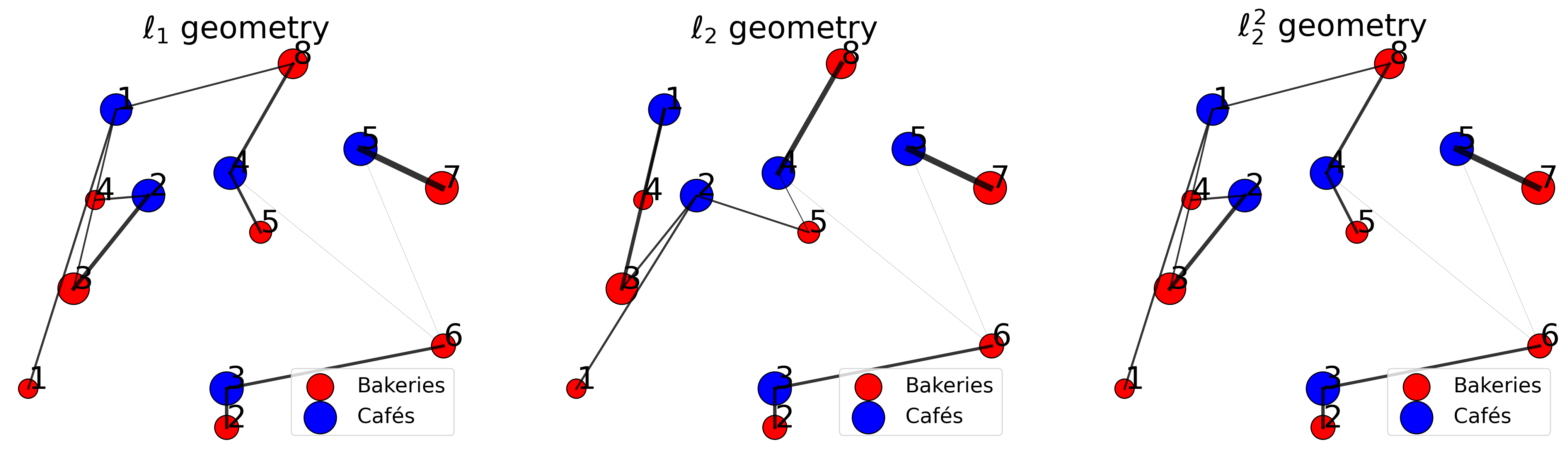

def plot_assignement(

ax,

x, a,

y, b,

optimal_plan,

title=None, x_label=None, y_label=None

):

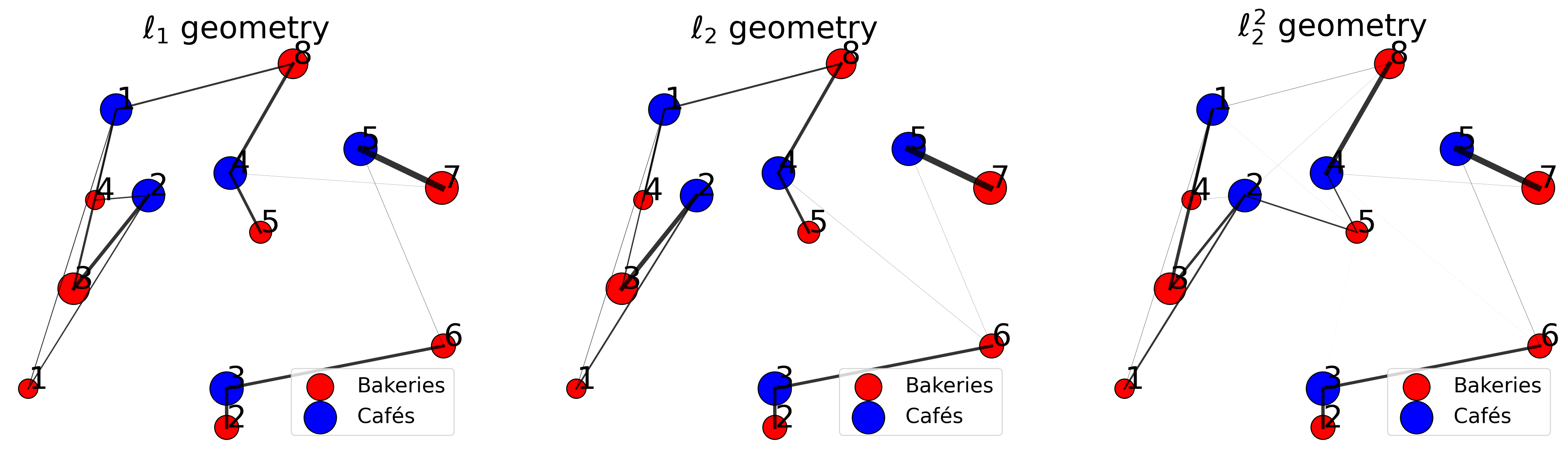

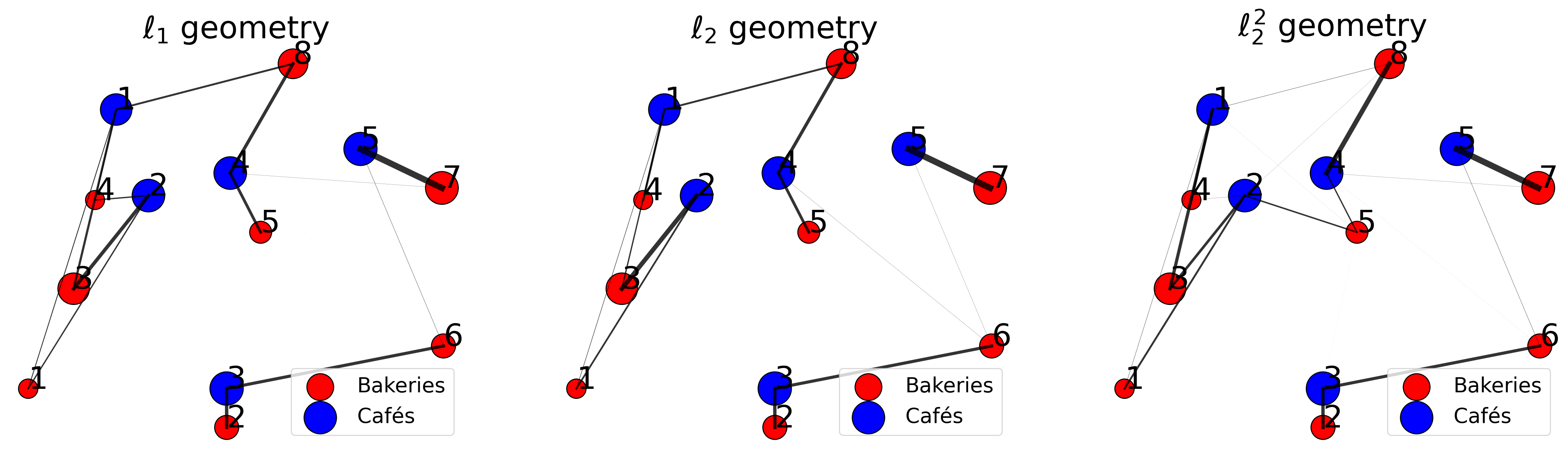

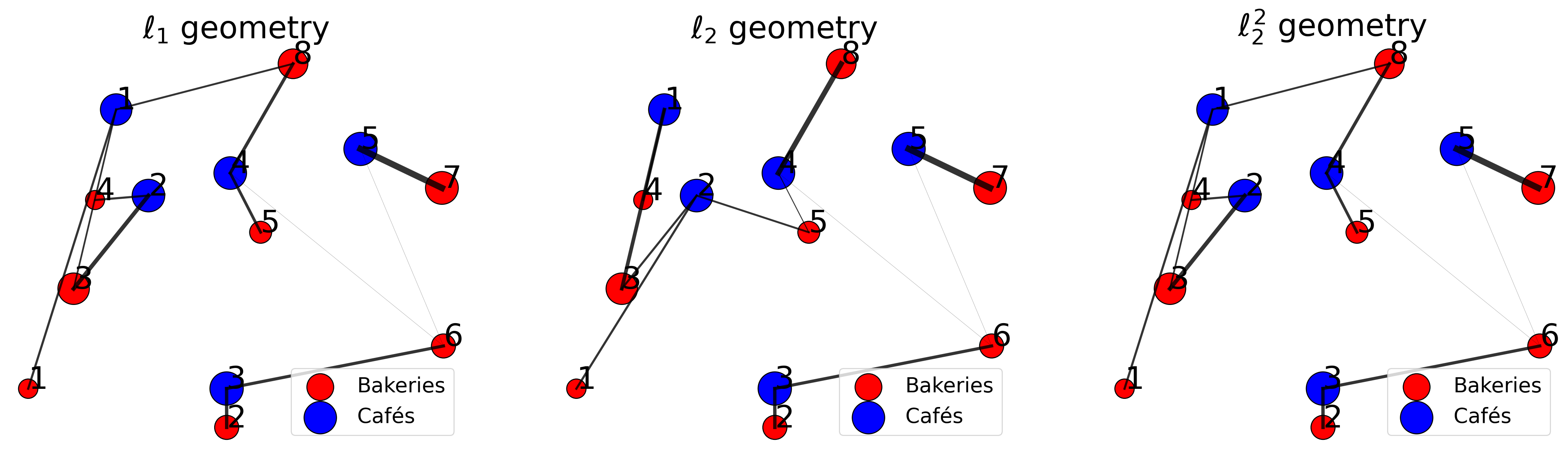

plot_weighted_points(

ax=ax,

x=x, a=a,

y=y, b=b,

title=None,

x_label=x_label, y_label=y_label

)

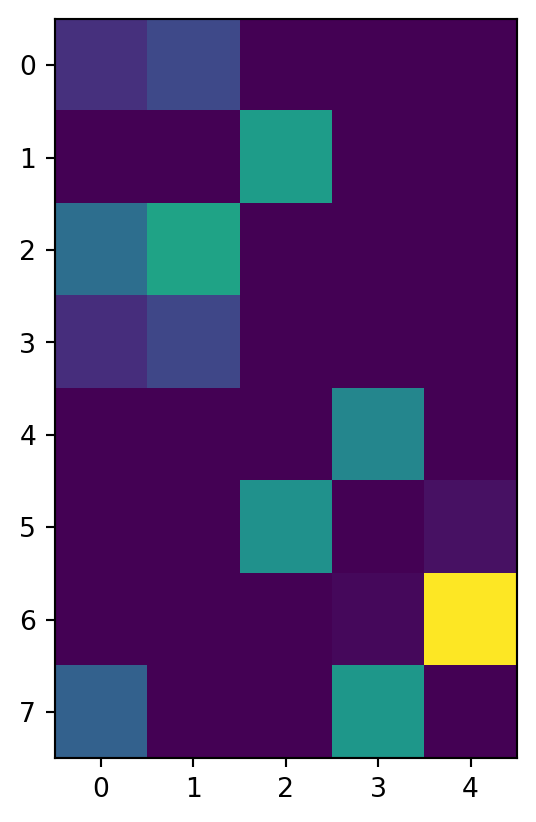

for i in range(optimal_plan.shape[0]):

for j in range(optimal_plan.shape[1]):

ax.plot([x[i,0], y[j,0]], [x[i,1], y[j,1]], c='k', lw=30*optimal_plan[i,j], alpha=0.8)

ax.axis('off')

ax.set_title(title, fontsize=30)
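The section does not show how `optimal_plan` is computed. As one possibility, assuming a squared Euclidean ground cost and entropic regularization (the `P_\epsilon^*` in `plot_consistency` points to a regularized variant), a plan of shape `(n, m)` can be obtained with Sinkhorn's matrix-scaling iterations. `squared_euclidean_cost` and `sinkhorn` are hypothetical names, and this plain version can underflow when `eps` is much smaller than the entries of the cost matrix.

```python
import numpy as np


def squared_euclidean_cost(x, y):
    """Cost matrix C[i, j] = ||x_i - y_j||^2 for clouds of shape (n, d) and (m, d)."""
    diff = x[:, None, :] - y[None, :, :]
    return np.sum(diff ** 2, axis=-1)


def sinkhorn(a, b, C, eps, n_iters=1000):
    """Entropic OT plan by Sinkhorn matrix scaling (plain, not log-domain).

    Returns P = diag(u) K diag(v) with K = exp(-C / eps). After each pair of
    updates the column sums equal b exactly and the row sums approach a.
    """
    K = np.exp(-C / eps)
    u = np.ones_like(a)
    v = np.ones_like(b)
    for _ in range(n_iters):
        u = a / (K @ v)    # match the row marginal a
        v = b / (K.T @ u)  # match the column marginal b
    return u[:, None] * K * v[None, :]


rng = np.random.default_rng(0)
x, y = rng.random((5, 2)), rng.random((4, 2))
a, b = np.full(5, 1 / 5), np.full(4, 1 / 4)
P = sinkhorn(a, b, squared_euclidean_cost(x, y), eps=0.5)
```

`P` has shape `(len(x), len(y))`, which is what the double loop in `plot_assignement` iterates over, so it could be passed as `plot_assignement(ax, x, a, y, b, P)`.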

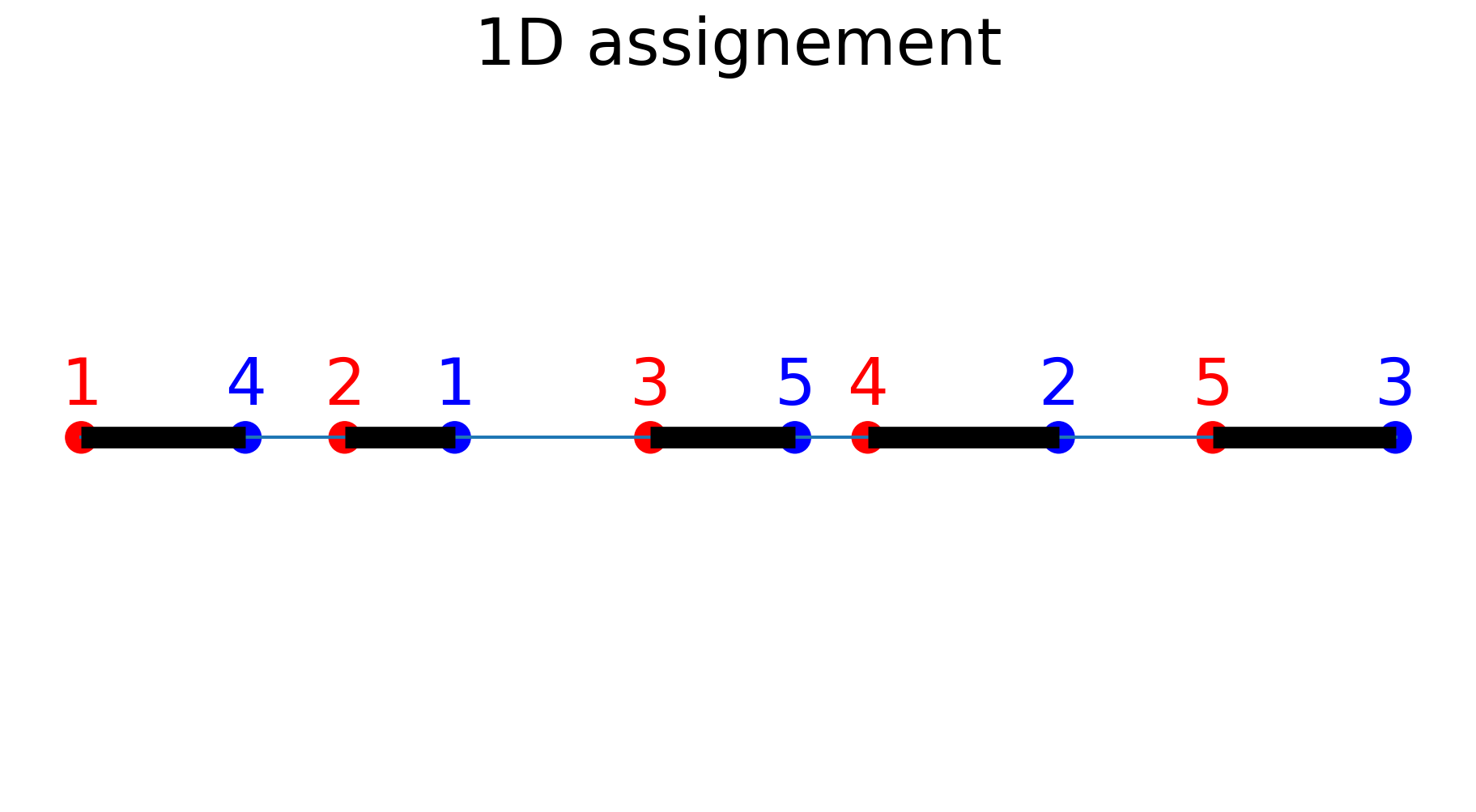

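```python
def plot_assignement_1D(
    ax,
    x, y,
    title=None
):
    """Draw the sorted (monotone) assignment between two 1D samples of equal size.

    The i-th smallest point of x is matched with the i-th smallest point of y.
    """
    assert len(x) == len(y), "x and y must have the same length."
    plot_points_1D(
        ax,
        x, y,
        title=None
    )
    x_sorted = np.sort(x)
    y_sorted = np.sort(y)
    # Draw a thick horizontal segment between each matched pair
    for i in range(len(x)):
        ax.hlines(
            y=0,
            xmin=min(x_sorted[i], y_sorted[i]),
            xmax=max(x_sorted[i], y_sorted[i]),
            color='k',
            lw=10
        )
    ax.axis('off')
    ax.set_title(title, fontsize=30)
```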
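`plot_assignement_1D` matches the `i`-th smallest point of `x` with the `i`-th smallest point of `y`. For a cost `|x - y|^p` with `p >= 1` (more generally, any convex function of the difference), this monotone matching is an optimal assignment. The sketch below checks that claim by brute force on a small random sample; both helper names are hypothetical, and enumerating all `n!` permutations is only practical for small `n`.

```python
import itertools

import numpy as np


def sorted_matching_cost(x, y, p=2):
    """Average cost |x - y|^p of matching the k-th smallest x with the k-th smallest y."""
    return np.mean(np.abs(np.sort(x) - np.sort(y)) ** p)


def brute_force_matching_cost(x, y, p=2):
    """Smallest average cost over all n! one-to-one matchings (small n only)."""
    x, y = np.asarray(x), np.asarray(y)
    return min(
        np.mean(np.abs(x - y[list(perm)]) ** p)
        for perm in itertools.permutations(range(len(y)))
    )


rng = np.random.default_rng(1)
x = rng.normal(size=6)
y = rng.normal(loc=1.0, size=6)
for p in (1, 2):
    # The two costs agree up to floating-point rounding
    print(p, sorted_matching_cost(x, y, p), brute_force_matching_cost(x, y, p))
```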

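```python
def plot_points_1D(
    ax,
    x, y,
    title=None
):
    """Scatter two 1D samples on a horizontal line, with uniform weights."""
    n, m = len(x), len(y)
    a = np.ones(n) / n  # uniform weights on x
    b = np.ones(m) / m  # uniform weights on y
    ax.scatter(x, np.zeros(n), s=1000*a, c='r')
    ax.scatter(y, np.zeros(m), s=1000*b, c='b')
    min_val = min(np.min(x), np.min(y))
    max_val = max(np.max(x), np.max(y))
    # Label each point with its 1-based index, slightly above the line
    for i in range(n):
        ax.annotate(str(i+1), xy=(x[i], 0.005), size=30, color='r', ha='center')
    for j in range(m):
        ax.annotate(str(j+1), xy=(y[j], 0.005), size=30, color='b', ha='center')
    ax.axis('off')
    # Draw the segment spanning both samples
    ax.plot(np.linspace(min_val, max_val, 10), np.zeros(10))
    ax.set_title(title, fontsize=30)
```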
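With the uniform weights `1/n` used in `plot_points_1D`, the sorted matching drawn by `plot_assignement_1D` can also be written as an `n`-by-`n` coupling matrix, the same kind of object that `plot_assignement` takes as `optimal_plan`. A minimal sketch, with `sorted_coupling` as a hypothetical helper:

```python
import numpy as np


def sorted_coupling(x, y):
    """Coupling matrix of the sorted matching between two 1D samples of equal
    size n with uniform weights: P[i, j] = 1/n if x[i] is matched with y[j]."""
    x, y = np.asarray(x), np.asarray(y)
    n = len(x)
    assert len(y) == n, "x and y must have the same length."
    P = np.zeros((n, n))
    # The k-th smallest x (index argsort(x)[k]) goes to the k-th smallest y
    P[np.argsort(x), np.argsort(y)] = 1.0 / n
    return P


x = np.array([3.0, 1.0, 2.0])
y = np.array([0.5, 2.5, 1.5])
P = sorted_coupling(x, y)  # nonzero entries: P[0, 1], P[1, 0], P[2, 2], each 1/3
```

Each row and each column of `P` sums to `1/n`, so it is a valid coupling of the two uniform measures.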

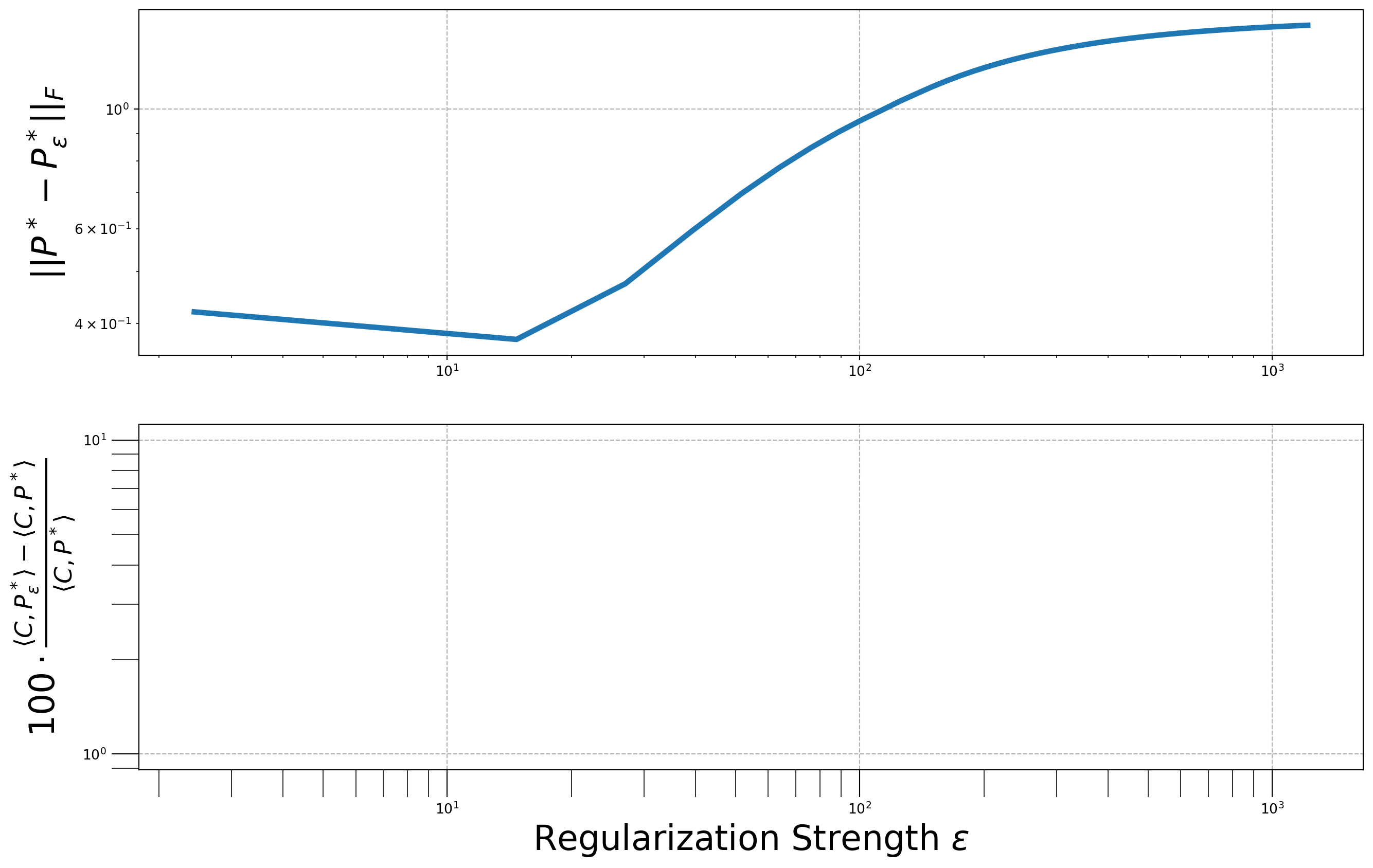

```python
def plot_consistency(
    ax,
    reg_strengths,
    plan_diff, distance_diff
):
    """Plot how the regularized solution approaches the unregularized one.

    ax[0]: Frobenius distance between the plans P* and P*_eps.
    ax[1]: relative gap, in percent, between their transport costs.
    """
    ax[0].loglog(reg_strengths, plan_diff, lw=4)
    ax[0].set_ylabel(r'$||P^* - P_\epsilon^*||_F$', fontsize=25)
    ax[0].tick_params(which='both', size=20)
    ax[0].grid(ls='--')
    ax[1].loglog(reg_strengths, distance_diff, lw=4)
    ax[1].set_xlabel(r'Regularization Strength $\epsilon$', fontsize=25)
    ax[1].set_ylabel(r'$ 100 \cdot \frac{\langle C, P^*_\epsilon \rangle - \langle C, P^* \rangle}{\langle C, P^* \rangle} $', fontsize=25)
    ax[1].tick_params(which='both', size=20)
    ax[1].grid(ls='--')
```
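`plot_consistency` expects two arrays indexed by the regularization strengths: the Frobenius distance `||P^* - P_\epsilon^*||_F` and the relative cost gap in percent. The sketch below shows one way to produce them, under stated assumptions: entropic regularization solved with log-domain Sinkhorn (stable for small `eps`, unlike plain matrix scaling), uniform weights with `n == m`, and an exact plan `P^*` found by enumerating permutations. Enumeration is valid because an optimal plan is then a permutation matrix divided by `n` (Birkhoff-von Neumann), but it is only feasible for small `n`. All function names are hypothetical.

```python
import itertools

import numpy as np


def logsumexp(M, axis):
    """Numerically stable log(sum(exp(M))) along `axis`."""
    m = np.max(M, axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.sum(np.exp(M - m), axis=axis))


def sinkhorn_log(a, b, C, eps, n_iters=2000):
    """Entropic OT plan P = exp((f_i + g_j - C_ij) / eps) via log-domain updates."""
    log_a, log_b = np.log(a), np.log(b)
    f, g = np.zeros_like(a), np.zeros_like(b)
    for _ in range(n_iters):
        f = eps * (log_a - logsumexp((g[None, :] - C) / eps, axis=1))  # rows -> a
        g = eps * (log_b - logsumexp((f[:, None] - C) / eps, axis=0))  # columns -> b
    return np.exp((f[:, None] + g[None, :] - C) / eps)


def exact_plan_uniform(C):
    """Unregularized plan P* for uniform weights and n == m, by enumeration."""
    n = C.shape[0]
    rows = np.arange(n)
    best = min(itertools.permutations(range(n)), key=lambda s: C[rows, list(s)].sum())
    P = np.zeros_like(C, dtype=float)
    P[rows, list(best)] = 1.0 / n
    return P


def consistency_curves(C, reg_strengths):
    """Return (plan_diff, distance_diff) in the form plot_consistency plots."""
    n = C.shape[0]
    a = b = np.full(n, 1.0 / n)
    P_star = exact_plan_uniform(C)
    cost_star = np.sum(C * P_star)  # <C, P*>
    plan_diff, distance_diff = [], []
    for eps in reg_strengths:
        P_eps = sinkhorn_log(a, b, C, eps)
        plan_diff.append(np.linalg.norm(P_star - P_eps, ord='fro'))
        distance_diff.append(100 * (np.sum(C * P_eps) - cost_star) / cost_star)
    return np.array(plan_diff), np.array(distance_diff)


rng = np.random.default_rng(0)
x, y = rng.random((5, 2)), rng.random((5, 2))
C = np.sum((x[:, None, :] - y[None, :, :]) ** 2, axis=-1)  # squared Euclidean cost
reg_strengths = np.array([1.0, 0.5, 0.2])
plan_diff, distance_diff = consistency_curves(C, reg_strengths)
```

The two arrays could then be passed as `plot_consistency(ax, reg_strengths, plan_diff, distance_diff)`, with `ax` holding two stacked axes, for instance from `plt.subplots(2, 1, sharex=True)`.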