A Blog Entry on Bayesian Computation by an Applied Mathematician

$$

$$

1 Problem

1.1 Starting Point

As a starting point, consider a simple univariate linear regression model with 3 parameters, i.e., \(\alpha,\beta,\sigma^2\): \[ \operatorname{E}[Y|X]=\alpha+\beta X+\epsilon,\qquad\epsilon\sim N(0,\sigma^2). \tag{1}\] Since (1) is just another expression for \(Y|X\sim N(\alpha+\beta X,\sigma^2)\), the likelihood function is given by \[ p(y|\alpha,\beta,\sigma)=\phi(y|\alpha+\beta x,\sigma^2), \] given data \(x\in\mathbb{R}\), where \(\phi(-|\mu,\sigma^2)\) represents the Gaussian density for \(N(\mu,\sigma^2)\).

1.2 Hierarchical Model

To make our model (1) more realistic, let’s say, we would like to model variation in the intercept \(\alpha\) by imposing another regression structure (you might call it super-population structure): \[ \alpha=\mu_\alpha+\epsilon_\alpha,\qquad\epsilon_\alpha\sim N(0,\sigma_\alpha^2). \tag{2}\]

Now, what does the likelihood function \(p(y|\mu_\alpha,\sigma_\alpha,\beta,\sigma)\) of this model look like?

The answer is normal likelihood.

1.3 Likelihood Calculation

First, rewrite (1) and (2) as \[ Y|X,\alpha\sim N(\alpha+\beta X,\sigma^2), \] \[ \alpha|\mu_\alpha,\sigma_\alpha\sim N(\mu_\alpha,\sigma_\alpha^2). \]

Using the formula (note this is not always true, see セクション 2.4) \[ p(y|\mu_\alpha,\sigma_\alpha,\beta,\sigma)=\int_\mathbb{R}p(y|\alpha,\beta,\sigma)p(\alpha|\mu_\alpha,\sigma_\alpha)\,d\alpha, \] we get a normal density \[ p(y|\mu_\alpha,\sigma_\alpha,\beta,\sigma)=\phi(y|\widetilde{\mu},\widetilde{\sigma}^2), \] where \[ \widetilde{\mu}(\beta,\mu_\alpha):=\beta x+\mu_\alpha, \] \[ \widetilde{\sigma}^2(\sigma,\sigma_\alpha):=\sigma^2+\sigma_\alpha^2. \]

This also follows from the reproducing property of normal distribution: \[ N(\beta X,\sigma^2)*N(\mu_\alpha,\sigma_\alpha^2)=N(\beta X+\mu_\alpha,\sigma^2+\sigma_\alpha^2). \]

2 Background in Probability

2.1 Core Proposition

Abstracting away by setting \[ \theta:=(\beta,\sigma),\qquad\varphi:=(\mu_\alpha,\sigma_\alpha), \] the core identity was the following: \[ p(y|\theta,\varphi)=\int p(y|\theta,\alpha)p(\alpha|\varphi)\,d\alpha. \tag{3}\]

Having such a good notation, this formula might look obvious for stats people, but how actually do we prove it?

2.2 Conditional Probability

Essentially, 式 3 comes from the tower property of conditional expectation:

Let’s see how we apply this tower property to our problem:

Concerning the definition of conditional probability, we first have the definition of conditional expectation, through which we define conditional probability as1 \[ \operatorname{P}[A|X]:=\operatorname{E}[1_A|X]. \]

2.3 Conditional Density

The last piece we need is the definition of conditional density.

Plugging in this characterization into (4), we get \[ \operatorname{P}[A|X=x]=\int_{\mathbb{R}^d}\operatorname{P}[A|X=x,Y=y]\,p(y|x)\,dy. \] Denoting the density of \(\operatorname{P}[A|X=x]\) as \(p(a|x)\), we have \[ p(a|x)=\int_{\mathbb{R}^d}p(a|x,y)\,p(y|x)\,dy. \]

2.4 Last Piece

Reterning to the notation in (3), we have \[ p(y|\theta,\varphi)=\int p(y|\theta,\varphi,\alpha)p(\alpha|\varphi)\,d\alpha. \]

This is slightly different from (3) in that there is \(p(y|\theta,\varphi,\alpha)\) instead of \(p(y|\theta,\alpha)\).

So the last piece we need is the modeling assumption in the regression setting of (1), which is conditional independence between \(Y\) and the hyperparameters \((\mu_\alpha,\sigma_\alpha)\) given \(\alpha\): \[ Y\perp\!\!\!\perp\varphi\mid\alpha. \]

Under this assumption, we have2 \[ p(y|\theta,\alpha,\varphi)=p(y|\theta,\alpha). \]

In the regression setting of (1), \[ p(y|\beta,\sigma,\alpha,\mu_\alpha,\sigma_\alpha)=p(y|\beta,\sigma,\alpha). \]

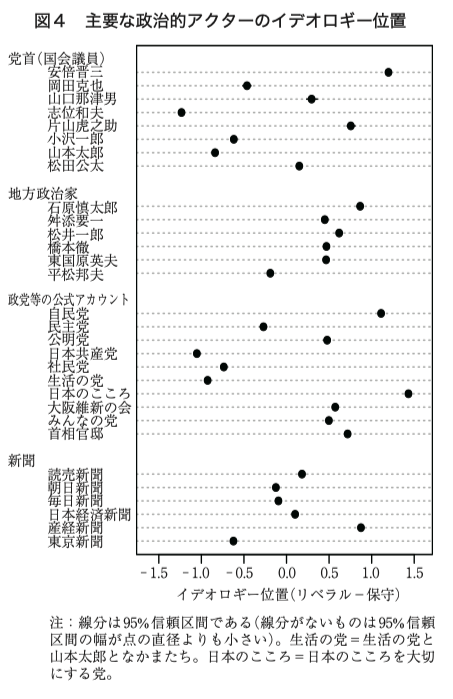

3 Real-world Example: Ideal Point Model

3.1 Specification

Let’s consider the following hierarchical model with 6 parameters, i.e., \(\alpha,\beta,\gamma,\delta,\sigma^2\) and \(X\): \[ Y\sim\operatorname{Bernoulli}(\mu), \] \[ \operatorname{logit}(\mu)=\alpha+\beta X, \] \[ X=\gamma+\delta Z+\epsilon,\qquad\epsilon\sim N(0,\sigma^2). \]

We see the hierarchical structure \[ Y|X\sim\operatorname{Bernoulli}(\operatorname{logit}^{-1}(\alpha+\beta X)), \] \[ X|Z\sim N(\gamma+\delta Z,\sigma^2), \] just as in セクション 1.3.

This time, however, we cannot detour the calculation by the tower property, while in セクション 1.3 we could sanity check the result by the reproducing property of normal distribution.

3.2 Likelihood Calculation

Through the core 式 3,

\[\begin{align*} p(y|\alpha,\beta,\gamma,\delta,\sigma)&=\int_\mathbb{R}p(y|\alpha,\beta,x)p(x|\gamma,\delta,\sigma)\,dx\\ &=1_{\left\{1\right\}}(y)\int_\mathbb{R}\operatorname{logit}^{-1}(\alpha+\beta x)\phi(x|\gamma+\delta z,\sigma)\,dx\\ &\qquad+1_{\left\{0\right\}}(y)\int_\mathbb{R}\biggr(1-\operatorname{logit}^{-1}(\alpha+\beta x)\biggl)\phi(x|\gamma+\delta z,\sigma)\,dx\\ &=\qquad\cdots\cdots \end{align*}\]

We won’t proceed any more, observing the integral is not always tractable.

If the likelihood involves an intractable integral, how can we proceed maximum likelihood / Bayesian estimation?

3.3 Data Augmentation

We can still find the mode of the likelihood function by the EM algorithm (Dempster ほか, 1977).

The case is similar in the Bayesian approach, where we enlarge the parameter space by treating \(x\) as a parameter (or a latent variable) and sample from \(x\) as well.

This is called data augmentation approach, initiated in the EM algorithm, later applied to Bayesian computations in (Tanner と Wong, 1987).

Although \(p(y|\alpha,\beta,\gamma,\delta,\sigma)\) might involve possibly intractable integrals over \(x\), \(p(y|\textcolor{red}{x},\alpha,\beta,\gamma,\delta,\sigma)\) does not, since it holds that \[ p(y|x,\alpha,\beta,\gamma,\delta,\sigma)=p(y|x,\alpha,\beta) \] given that \(y\) and \(\gamma,\delta,\sigma\) are conditionally independent given \(x\).

Therefore, the posterior distribution of \((x,\alpha,\beta,\gamma,\delta,\sigma)\) is given by

\[ p(x,\alpha,\beta,\gamma,\delta,\sigma|y)\,\propto\,p(y|x,\alpha,\beta,\gamma,\delta,\sigma)p(x,\alpha,\beta,\gamma,\delta,\sigma) \] \[ =p(y|x,\alpha,\beta)p(x|\gamma,\delta,\sigma)p(\alpha,\beta,\gamma,\delta,\sigma). \tag{5}\]

Basically, higher level parameters \(\gamma,\delta,\sigma\) are treated as a part of the machinery that systematically defines a prior for the lower level parameter \(x\). In fact, this was the motivation when the hierarchical structure is first introduced in (Lindley と Smith, 1972).

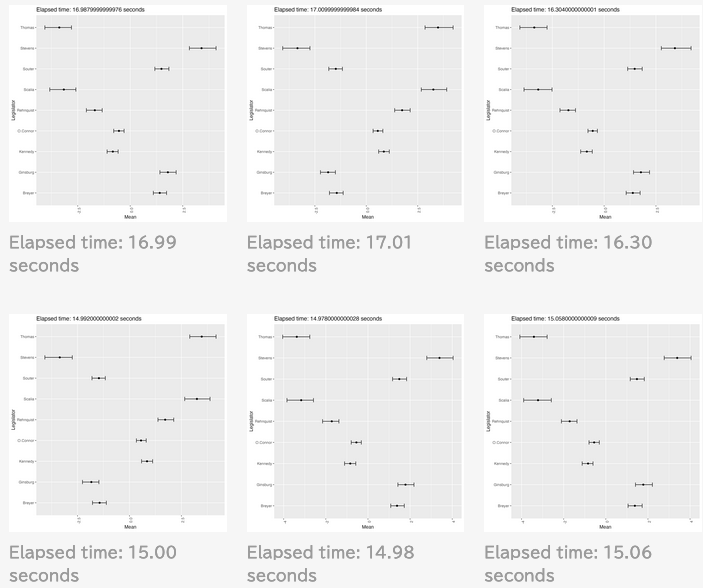

3.4 Bayesian Computation

Using the decomposition (5), we can readily run MCMC algorithms to sample from the posterior distribution.

Introducing another latent variable, we may also run the Gibbs sampler based on Polya-Gamma decomposition as in (Polson ほか, 2013).

Alternatively, we can run the HMC (Hamiltonian Monte Carlo) algorithm based on the gradient of the log posterior density.

In the following articles, we prepared you with the codes for gradient-based Bayesian estimation, including HMCs via Stan and Zig-Zag via PDMPFlux. Visit the following articles (although they are in Japanese) for more details:

参考文献

脚注

For this definition of conditional probability, see (Kallenberg, 2021, p. 167), (Dudley, 2002, p. 347). If we are to define conditional probability first, we need the concept of regular conditional probability, which is not discussed here.↩︎

see, for example, (Th’m 8.9 Kallenberg, 2021, p. 170)↩︎

引用

@online{shiba2024,

author = {Shiba, Hirofumi},

title = {Likelihood of Hierarchical Models},

date = {2024-12-23},

url = {https://162348.github.io/posts/2024/Probability/likelihood.html},

langid = {ja},

abstract = {We examine how to find \& formally determine the

likelihood function of hierarchical models. As a real-world example,

we consider the ideal point model, also known as the 2-parameter

logistic item response model.}

}