関連ページ

A Blog Entry on Bayesian Computation by an Applied Mathematician

$$

$$

1 半正定値カーネル

1.1 はじめに

カーネル法は,カーネルの選択と構成が第一歩になる.

例えば Gauss 過程 は,平均関数と共分散関数=正定値カーネルを定めるごとに定まる.従って Gauss 過程回帰などを実行する前には,適切な事前 Gauss 過程を定める半正定値カーネルを選ぶ必要がある.

1.2 定常カーネル

距離空間 \((T,d)\) 上の Gauss 過程 \((X_t)\) が定常的である場合,共分散関数 \[ \mathrm{C}(s,t):=\operatorname{E}\biggl[(X_s-\operatorname{E}[X_s])(X_t-\operatorname{E}[X_t])\biggr],\qquad s,t\in T \] は距離 \(d(s,t)\) のみの関数になる.

このような半正定値関数 \(\mathrm{C}\) の例を \(T=\mathbb{R}^d\) として挙げる.

1.2.1 Poisson 核

1.2.2 Gauss 核

Radial Basis Function とは動径 \(r=\lvert x\rvert\) の関数であることをいう.RBF カーネルと言ったとき特に Gauss 核を指すことが多いが,これは混乱を招く.(Murphy, 2023) では Squared Exponential kernel の語が使われているが,ここでは Gauss 核と呼ぶ.

1.2.3 関連度自動決定核 (ARD)

そもそも関連度自動決定 (MacKay, 1994), (Neal, 1996, p. 16) またはスパースベイズ学習 (Tipping, 2001) とは,ニューラルネットワークの最初のレイヤーの荷重をスパースにするために分散不定の正規分布を事前分布として導入する,という技法である (Loeliger et al., 2016).

一般に出力をスパースにするためのフレームワークとしても活用され,ARD 核はその最たる例である.

1.2.4 Matérn 核

ARD 核は軟化性能を持つため,見本道は無限回微分可能になってしまう.

これが不適な状況下では,Matérn 核 \[ K(r;\nu,\ell)=\frac{2^{1-\nu}}{\Gamma(\nu)}\left(\frac{\sqrt{2\nu}r}{\ell}\right)^\nu K_\nu\left(\frac{\sqrt{2\nu}r}{\ell}\right) \] などが用いられることがある.ただし,\(K_\nu\) は修正 Bessel 関数とする.

\(\nu\) は滑らか度を決定し,見本道は \(\lfloor\nu\rfloor\) 階 \(L^2\)-微分可能になる.\(\nu\to\infty\) の極限で Gauss 核に収束する.

\(\nu=1/2\) の場合 \[ K(r;1/2,\ell)=\exp\left(-\frac{r}{\ell}\right) \] であり,対応する Gauss 過程は Ornstein-Uhlenbeck 過程 である.

1.2.5 定常スペクトル核

任意の(定常な)正定値関数は,ある関数 \(p\) に関して \[ K(r)=\int_{\mathbb{R}^d}p(\omega)e^{i\omega^\top r}\,d\omega \tag{1}\] と表せる.この \(p\) は スペクトル密度 という.

\(K\) が RBF 核であるとき,\(p\) もそうなる: \[ p(\omega)=\sqrt{2\pi\ell^2}\exp\biggr(-2\pi^2\omega^2\ell^2\biggl). \]

この対応を用いて,スペクトル密度 \(p\) をデザインすることで,様々な正定値カーネルを得ることが出来る.

例えば spectral mixture kernel (Wilson and Adams, 2013) では,スケール母数と位置母数とについて RBF 核の混合を考えることで,新たな正定値カーネルを構成する.

1.3 非定常カーネル

環境統計学などにおいて,空間相関の仕方が時間的に変化していくという設定がよくある.

このような場合は,一般の2変数の半正定値カーネル関数を考えることが有用である.

1.3.1 多項式核

\[ K(x,y)=(x^\top y+c)^M \] は非斉次項 \(c\) を持つ,\(M\) 次の多項式核と呼ばれる.

1.3.2 Gibbs 核

Gibbs 核 (Gibbs, 1997) は,ハイパーパラメータ \(\sigma,\ell\) を入力に依存するようにした RBF 核である: \[ K(x,y)=\sigma(x)\sigma(y)\sqrt{\frac{2\ell(x)\ell(y)}{\ell(x)^2+\ell(y)^2}}\exp\left(-\frac{\lvert x-y\rvert^2}{\ell(x)^2+\ell(y)^2}\right). \]

このようにすることで,\(\sigma,\ell\) を別の Gauss 過程でモデリングし,階層モデルを考えることもできる (Heinonen et al., 2016).

1.3.3 スペクトル核 (Remes et al., 2017)

正定値核は Fourier 変換を通じて,スペクトル密度によって指定することもできる(Bochner の定理).

この手法は,非定常核に対しても (Remes et al., 2017) が拡張している.

1.4 位相空間上の核

文章上の string kernel (Lodhi et al., 2002) やグラフ上の graph kernel (Kriege et al., 2020) も考えられている.

1.4.1 乱歩核

(Borgwardt et al., 2006) は random walk kernel を提案しており,\(\mathbb{R}^d\) へ埋め込まれるようなものの計算量は \(O(n^3d)\) である.

1.4.2 Weisfeiler-Lehman 核

さらに効率の良いカーネルとして Weisfeiler-Lehman グラフカーネル (Shervashidze et al., 2011) もある.

1.5 核の構成

1.5.1 半正定値核のなす正錐

半正定値核は \(\mathrm{Map}(T^2,\mathbb{R})\) 上で閉凸錐をなす.すなわち, \[ c_1K_1+c_2K_2,\qquad c_1,c_2\ge0, \] とその各点収束極限は再び半正定値核である.

1.5.2 半正定値構成

1.5.3 核の押し出し

\(S^1\simeq[0,2\pi)\) 上の確率分布は,方向データとして,海洋学における波の方向,気象学における風向のモデリングに応用を持つ.

全射 \(\pi:\mathbb{R}\twoheadrightarrow S^1\) に従って,\(\mathbb{R}\)-値の Gauss 過程を,方向データ値の Gauss 過程に押し出すことが出来る (Jona-Lasinio et al., 2012).

これに伴い,\(\mathbb{R}\)-値の核 \(K:\mathbb{R}\to\mathcal{P}(\mathbb{R})\) を \(S^1\)-値に押し出すこともできる: \[ \pi_*K:\mathbb{R}\to\mathcal{P}(\mathbb{R})\xrightarrow{\pi_*}\mathcal{P}(S^1). \]

\(\pi\) による Gauss 分布の押し出し \(\pi_*\operatorname{N}_1(\mu,\sigma^2)\) は wrapped normal distribution と呼ばれている.これに対応し,この Gauss 過程は wrapped Gaussian process と呼ばれている (Jona-Lasinio et al., 2012).

1.6 核の Monte Carlo 近似

1.6.1 カーネルの近似

以上,種々のカーネル関数を紹介してきたが,これらはデータに関して効率的に計算される必要がある.

特に潜在空間上での Gram 行列の逆行列または Cholesky 分解を計算する \(O(n^3)\) の複雑性が難点である (Liu et al., 2020).

このデータ数 \(n\) に関してスケールしない点が従来カーネル法の難点とされてきたが,これはランダムなカーネル関数を用いた Monte Carlo 近似によって高速化できる.\(m\) 個のランダムに選択された基底関数を用いれば,Monte Carlo 誤差を許して計算量は \(O(nm+m^3)\) にまで圧縮できる.

1.6.2 Random Fourier Features

正定値核のスペクトル表現 (1) を通じて,核の値 \(K(x,y)\) を Monte Carlo 近似をすることが出来る.

例えば \(K\) が RBF 核であるとき,\(p\) は正規密度になるから,Gauss 確率変数からのサンプリングを通じてこれを実現できる: \[ K(x,y)\approx\phi(x)^\top\phi(y),\qquad \phi(x):=\sqrt{\frac{1}{D}}\begin{pmatrix}\sin(Z^\top x)\\\cos(Z^\top x)\end{pmatrix},Z=(z_{ij}),z_{ij}\overset{\text{i.i.d.}}{\sim}\operatorname{N}(0,\sigma^{-2}). \]

これは核の値 \(K(x,y)\) を,逆に(ランダムに定まる)特徴ベクトル \(\phi(x),\phi(y)\) の値を通じて計算しているため,Random Fourier Features (Rahimi and Recht, 2007), (Sutherland and Schneider, 2015),または Random Kitchen Sinks (Rahimi and Recht, 2008) と呼ばれる.

\(Z\) の行を互いに直交するように取ることで,Monte Carlo 推定の精度が上がる.これを orthogonal random features (Yu et al., 2016) と呼ぶ.

2 距離学習

2.1 はじめに

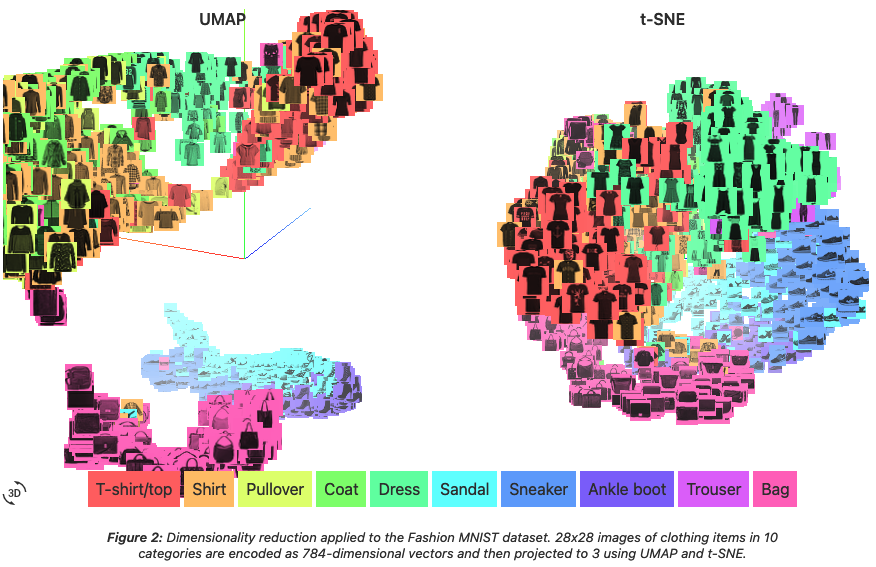

2つのデータ点 \(x_1,x_2\in\mathcal{X}\) に対して,その意味論的な距離 \(d(x_1,x_2)\) を学習することを考える.

これはある種の表現学習として,分類,クラスタリング,次元縮約 などの事前タスクとしても重要である.顔認識など,computer vision への応用が大きい.

古典的には,\(K\)-近傍分類器と対置させ,これが最大の精度を発揮するような距離を学習することが考えられる

また,ニューラルネットワークにより埋め込み \(f:\mathcal{X}\hookrightarrow\mathbb{R}^d\) を構成し,その後 \(\mathbb{R}^d\) 上の Euclid 距離を \(d\) として用いるとき,これを 深層距離学習 (deep metric learning) という.

深層距離学習では距離学習自体が下流タスクとなっており,その性能が深層埋め込み \(f\) に依存している.実際,深層距離学習の性能は芳しいと言えないことが知られている (Musgrave et al., 2020).

2.2 \(K\)-近傍分類

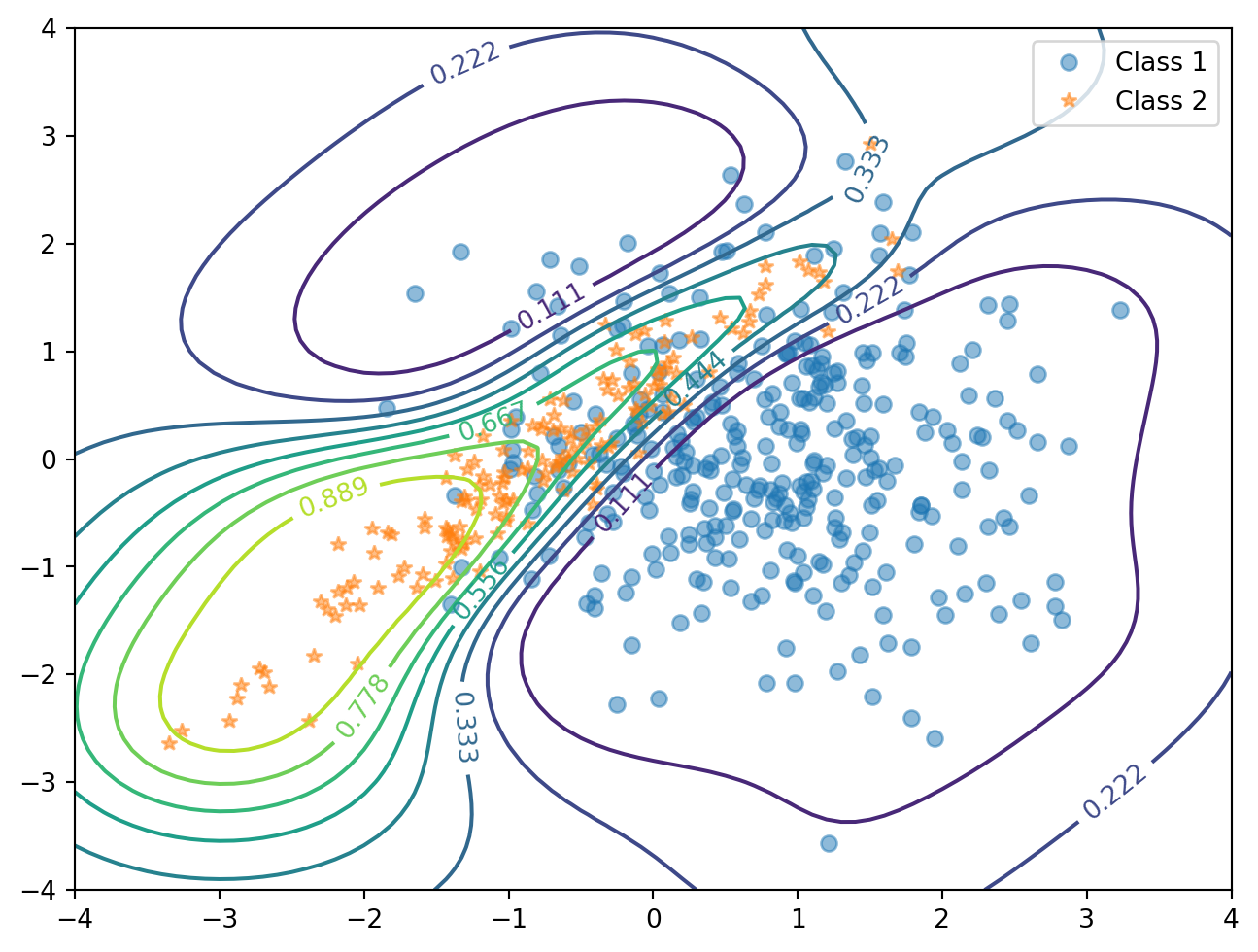

ラベル付きデータ \(\mathcal{D}=\{(x_i,y_i)\}\subset\mathcal{X}\times[C]\) が与えられているとする.

\(K\)-近傍分類法は,「\(x\) の近傍上位 \(K\) 個のデータに訊いてみる」という方法であり,こうして得る事後確率 \[ p(y=c|x,\mathcal{D})=\frac{1}{K}\sum_{i\in\mathcal{D}_K(x)}1_{\left\{y_i=c\right\}} \] から \(x\) のラベルを予測する.

この事後分布をさらにクラスタリングに用いたものが \(K\)-平均法 (MacQueen, 1967), (Lloyd, 1982) である

\(K\)-近傍法はそのシンプルな発想に拘らず一致性と,良い収束レートを持つ (Chaudhuri and Dasgupta, 2014).

一様カーネル \[ K(r;\ell):=\frac{1}{2\ell}1_{[0,\ell]}(r) \] が定める密度推定量を,どの

2.3 Mahalanobis 距離の学習

\[ d(x_1,x_2;M):=\sqrt{(x_1-x_2)^\top M(x_1-x_2)} \] というパラメトリックモデルを過程し,\(M\) を学習することを考える.

2.3.1 大マージン最近傍 (LMNN, Kilian Q. Weinberger et al., 2005)

Large margin nearest neighbor (LMNN) (Kilian Q. Weinberger et al., 2005), (Kilian Q. Weinberger and Saul, 2009) は,\(K\)-近傍分類器による後続タスクが最も精度が良くなるように \(M\) を学習する方法をいう.

各データ番号 \(i\in[n]\) に対して,これと似ているデータ番号の集合 \(N_i\subset[n]\) が与えられているとする(ラベルが同一であるデータ点など).これに対して,\(\lambda\in(0,1),m\ge0\) をハイパーパラメータとして, \[ \mathcal{L}(M):=(1-\lambda)\mathcal{L}^-(M)+\lambda\mathcal{L}^+(M),\qquad\lambda\in(0,1), \] \[ \mathcal{L}^-(M):=\sum_{i=1}^n\sum_{j\in N_i}d(x_i,x_j;M)^2,\quad\mathcal{L}^+(M):=\sum_{i=1}^n\sum_{j\in N_i}\sum_{k=1}^N\delta_{ik}\biggr(m+d(x_i,x_j;M)^2-d(x_i,x_k;M)^2\biggl)^2, \] を最小化するように \(M\) を学習する.

\(\mathcal{L}\) は凸関数であるため,半正定値計画法が適用できる.また,\(M:=W^\top W\) によりパラメータ変換をして,\(W\) に関して解くことで,問題の凸性を失う代わりに次元数を削減できる.

2.3.2 近傍成分分析 (NCA, Goldberger et al., 2004)

近傍成分分析 (NCA: Neighborhood Component Analysis) (Goldberger et al., 2004) では \(W\) を学習する.

類似度行列 \(W\) に関して,確率的近傍埋め込み でも使うモデル \[ p_{ij}^W:=\frac{\exp\left(-\lvert Wx_i-Wx_j\rvert^2\right)}{\sum_{k\neq i}\exp\left(-\lvert Wx_i-Wx_k\rvert^2\right)} \] を考える.各 \(i\in[n]\) について,\(x_i\) 以外のデータから \(x_j\) のラベルを \(1\)-近傍分類器で正しく予測する確率が最大になるように, \[ \mathcal{L}(W):=1-\frac{1}{N}J(W),\quad J(W):=\sum_{i=1}^n\sum_{(i,j)\in E}p_{ij}^W \] を最小化するように学習する.ただし,辺の集合 \(E\) は,ラベルの同じデータを結ぶとした.

2.4 深層距離学習

2.4.1 分類に基づく目的関数

深層距離学習では目的関数の設定が重要である.

最も初等的には,自己符号化器などで分類問題を解き,その内部表現(よく最後から2層目を用いる)での Euclid 距離を距離関数に用いる方法がある.

しかし,距離の情報を学習するために,分類タスクは弱すぎるようである.

2.4.2 2者比較に基づく目的関数

\[ \mathcal{L}(\theta;x_i,x_j):=\delta_{y_i,y_j}d(z_i,z_j)^2+(1-\delta_{y_i,y_j})\biggr(m-d(z_i,z_j)^2\biggl)_+,\qquad z_i=f_\theta(x_i) \] という損失関数は 対照的損失 (contrastive loss) (Chopra et al., 2005) と呼ばれる.

この損失はラベル \(y_i,y_j\) が同一のデータ \(x_i,x_j\) の潜在表現の距離を近づけ,ラベルが異なるデータは \(m\) 以上は話すように埋め込み \(f_\theta\) を学習する.

この際に用いるニューラルネットワークは,同時に2つの入力 \(x_i,x_j\) をとって学習することから,双子ネットワーク (Siamese network) とも呼ばれる.

2.4.3 3者比較に基づく目的関数

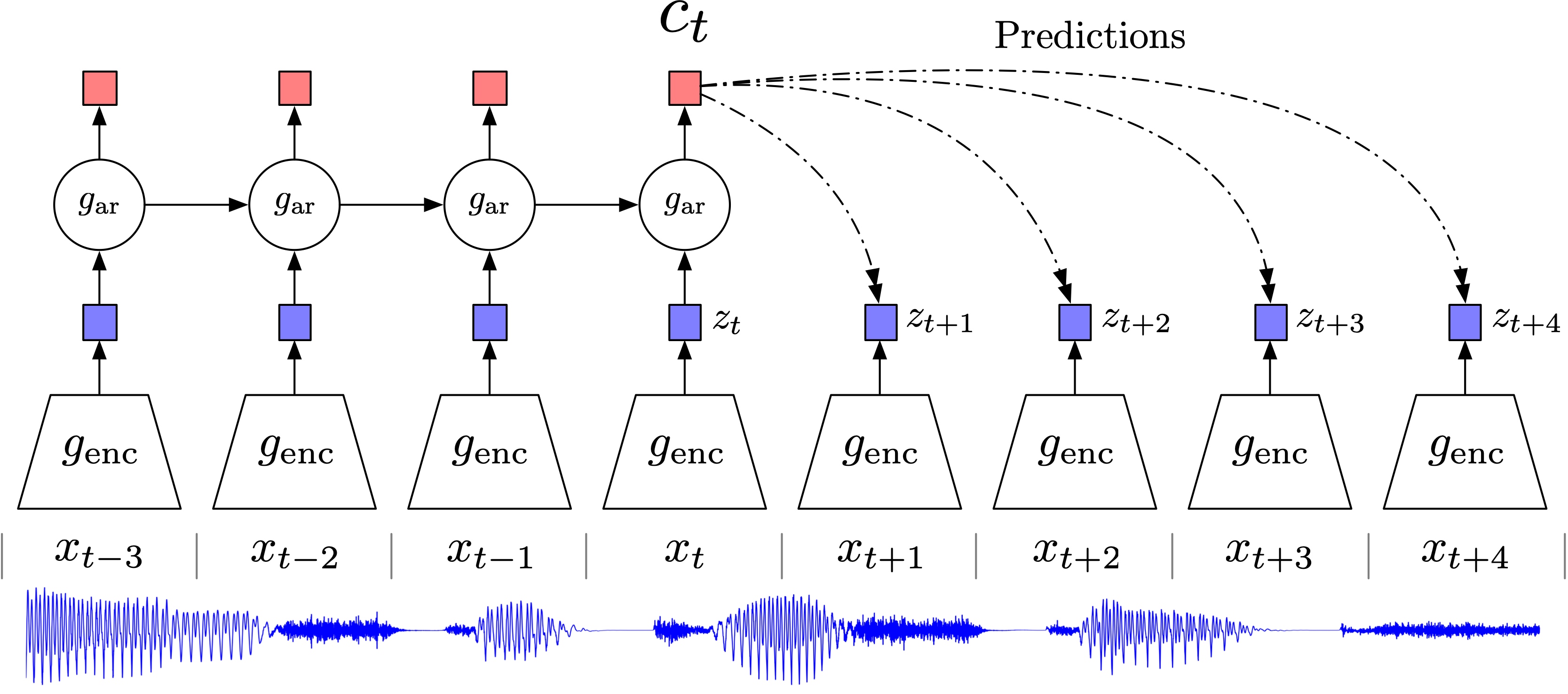

この方法は直ちに三子損失 (triplet loss) (Schroff et al., 2015),\(n\)-ペア損失 (\(n\)-pair loss) (Sohn, 2016), (Oord et al., 2018) に拡張された.

このことにより,\(x_i,x_j\) の「近さ」のスケールと「遠さ」のスケールが一致し,安定した結果が得られる.

三子損失は,各データ \(x_i\) に対して,「似ている」ペア \(x_i^+\) と「似ていない」ペア \(x_i^-\) を事前に選び, \[ \mathcal{L}(\theta;x_i,x_i^+,x_i^-):=\biggr(d_\theta(x_i,x_i^+)^2-d_\theta(x_i,x_i^-)^2+m\biggl)_+,\qquad m\in\mathbb{R} \] と定められる.このとき,\(x_i\) は参照点 (anchor) と呼ばれる.

この方法は \(x_i^+,x_i^-\) を選ばなければいけないが,その分拡張性に優れる.ノイズ対照学習 の稿も参照.

\(n\)-ペア損失では,負のデータ \(x_i^-\) をさらに増やす.これは (Oord et al., 2019 Contrastive Predictive Coding) にて,InfoMax の観点から表現学習に用いられたものと一致する.

2.4.4 3者比較の加速

負の例 \(x_i^-\) を特に情報量が高いもの (hard negatives, Faghri et al., 2018) を選ぶことで,学習を加速させることができる.

これは,3者損失を提案した Google の FaceNet (Schroff et al., 2015) で考えられた戦略である.

クラスラベルが得られる場合,各クラスから代表的なデータを選んでおくことで \(O(n)\) にまで加速できる (Movshovitz-Attias et al., 2017).この代表点は固定して1つに定める必要はなく,ソフトな形で選べる (Qian et al., 2019).

3 終わりに

ここで扱った深層距離学習は,現代的には表現学習として更なる発展を見ている.

References

Footnotes

(Murphy, 2022, p. 565) 17.1節は,半正定値核のことを Mercer 核とも呼んでいる.↩︎

RBF は (持橋大地 and 大羽成征, 2019, p. 68),SE は (Rasmussen and Williams, 2006, p. 14) の用語.(Murphy, 2023) では両方が併記されている.Gaussian kernel とも呼ばれる.↩︎

他のパラメータの入れ方もある.例えば

GPyでの実装 は \(\sigma^2\exp\left(-\frac{r^2}{2}\right)\) を採用している.Fourier 変換や偏微分方程式論の文脈では \(\frac{1}{(4\pi t)^{d/2}}\exp\left(-\frac{r^2}{4}\right)\) も良く用いられる.これは熱方程式の基本解になるためである.↩︎(MacKay, 1994), (Neal, 1996, p. 16) なども参照.↩︎